Building powerful AI agents is not the endgame. If you are reading this, you have probably built agents that can reason, act, and even use tools on their own. Maybe they are already doing impressive work. But once you want to trust those agents in production, new challenges appear that go beyond making sure the agent "works" in the lab.

What happens when your agents need a human's approval before making a risky decision? Can your agents survive long runtimes, outages, or restarts without losing their place? And what does it take to support agents that are not just scripts on a desktop but complex systems running across cloud platforms and distributed environments?

Questions like these led us at SnapLogic to rethink how agent state is managed. The answer: Agent Continuations, a new mechanism that makes agent workflows truly resumable, reliable, and collaborative.

Where does the human fit in?

Agent automation has enormous benefits, but most organizations need guardrails. For high-risk tasks such as money transfers, account deletions, or major changes to critical systems, people want oversight. We call this human-in-the-loop decision making.

You do not want an agent to push ahead with a risky step before a human has given the green light. At the same time, you do not want the agent's entire workflow to stall just to wait for approval, especially when you are coordinating across teams or time zones.

Long-running agents and the problem of failure

The more sophisticated your agents become, the more likely they are to run for hours (or even days), making many decisions along the way. But long-running processes are a magnet for failures. Network glitches, hardware problems, API limits, or cloud service interruptions can wipe out progress if you are not careful.

Most frameworks today assume that the agent's main loop simply keeps running until it is finished. In practice, you cannot always guarantee that. If the system goes down, does your agent have to start from scratch? Or can it pick up exactly where it left off?

The new world: multi-level, distributed agents

Agent architectures are evolving. Instead of one big agent, there are often orchestrators that delegate work to sub-agents, each with its own tasks and toolkits. These sub-agents can run on different servers or cloud platforms, or even interact through interfaces such as Slack or web apps.

This complexity multiplies the risk of interruption and makes it even more important to have a reliable way to pause, persist, and resume agent execution, especially when humans need to step in or external dependencies are involved.

Enter Agent Continuations

To solve these challenges, we took inspiration from programming language theory, specifically the concept of continuations. In programming, a continuation captures everything you need to know to pause a computation and resume it later from a specific point.

Agent Continuations do the same for AI workflows:

- At any step in an agent's process, you can take a "snapshot" of its entire state, including the tools it was about to call, its pending actions, and even the nested state of sub-agents.

- That snapshot can be stored, moved, or even handed off to another system.

- When you are ready, for example after a human has approved a request, you send the continuation back and the agent picks up exactly where it was interrupted, with nothing lost in between.
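The snapshot idea in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the format used by the Agent Continuations prototypes: the field names (`messages`, `pending_tool_call`, `sub_agents`) and the helper functions are hypothetical, and JSON is just one convenient way to make the state portable.

```python
import json


def make_snapshot(messages, pending_tool_call, sub_agents=None):
    """Bundle an agent's state into a plain, JSON-serializable dict.

    All field names are assumptions chosen for this sketch.
    """
    return {
        "messages": list(messages),              # history so far
        "pending_tool_call": pending_tool_call,  # the call the agent was about to make
        "sub_agents": sub_agents or {},          # nested snapshots, keyed by name
    }


def save(snapshot):
    """Turn the snapshot into a string that can be stored or sent elsewhere."""
    return json.dumps(snapshot)


def load(payload):
    """Rebuild the snapshot on the receiving side."""
    return json.loads(payload)


snap = make_snapshot(
    messages=[{"role": "user", "content": "Delete the account of user 42"}],
    pending_tool_call={
        "id": "call_1",
        "name": "delete_account",
        "arguments": {"user_id": 42},
    },
)

payload = save(snap)       # could go to a database, a queue, or another service
restored = load(payload)   # later, possibly on a different machine
print(restored == snap)    # True
```

Because the state is plain data, nothing about the paused agent has to stay in memory while it waits.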

How does it work in practice?

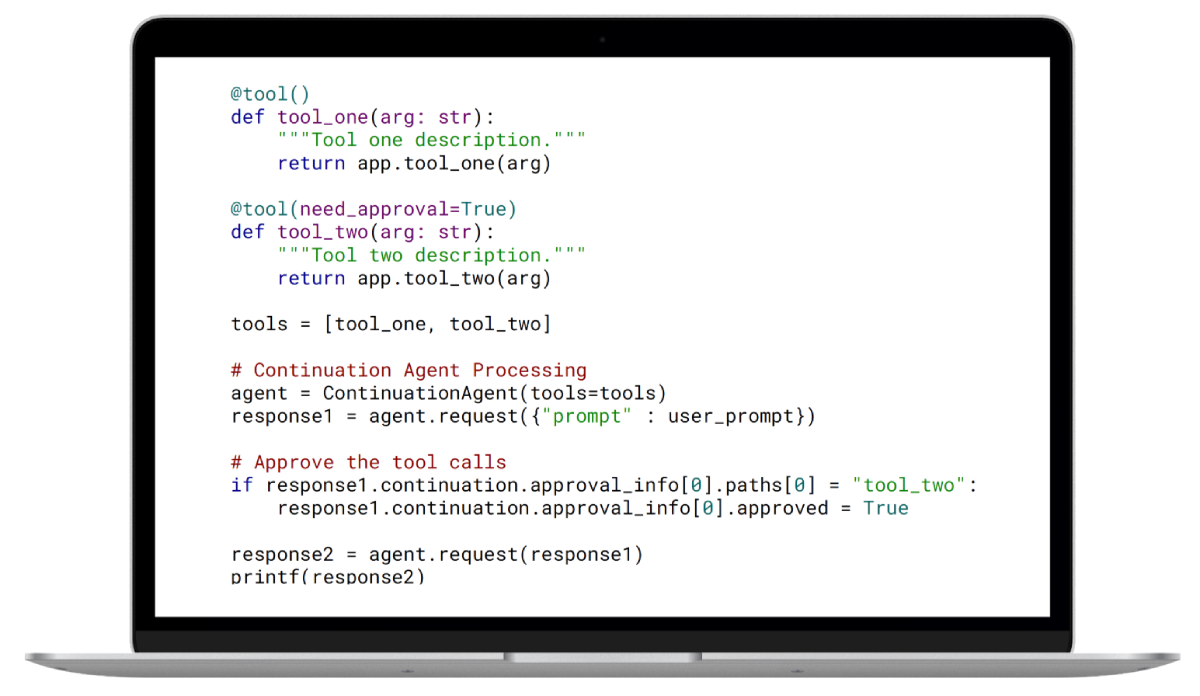

Every agent interaction, every LLM call, and every tool execution is recorded in a structured "messages array". This array works like an event log that holds the entire conversation and decision history up to the current point. When a pause is needed (for example, for human approval or because an agent has hit a resource limit), a continuation object is created.

This continuation object holds:

- The messages array (the agent's memory of what has happened)

- Metadata about what needs to be resumed (for example, which tool call is waiting for approval)

- Flags to track which steps have been approved or processed

When agents are nested, continuations support arbitrary levels of recursion, capturing not only the state of the main agent but also the states of all sub-agents.
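One way to picture such a continuation object is as a small recursive data structure. The sketch below is an assumption for illustration only: the class name, field names, and the `pending_approvals` helper are hypothetical and are not taken from the Agent Continuations code.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class Continuation:
    """Hypothetical continuation object; field names are assumptions."""
    agent: str                                            # name of the paused agent
    messages: List[Dict[str, Any]]                        # the messages array
    resume_request: Optional[Dict[str, Any]] = None       # tool call awaiting approval
    approved: Dict[str, bool] = field(default_factory=dict)  # tool call id -> decision
    children: Dict[str, "Continuation"] = field(default_factory=dict)  # sub-agent states


def pending_approvals(cont: Continuation,
                      path: Tuple[str, ...] = ()) -> List[Tuple[Tuple[str, ...], str]]:
    """Walk the tree and list every tool call still waiting for a decision."""
    here = path + (cont.agent,)
    found = []
    request = cont.resume_request
    if request is not None and request["id"] not in cont.approved:
        found.append((here, request["id"]))
    for child in cont.children.values():
        found.extend(pending_approvals(child, here))  # recurse into sub-agents
    return found


sub = Continuation(
    agent="accounts",
    messages=[{"role": "assistant", "content": "Requesting admin privileges"}],
    resume_request={"id": "call_2", "name": "set_privileges"},
)
root = Continuation(
    agent="hr_orchestrator",
    messages=[{"role": "user", "content": "Onboard the new hire"}],
    children={"call_1": sub},
)

print(pending_approvals(root))
# [(('hr_orchestrator', 'accounts'), 'call_2')]
```

The recursion is what lets a reviewer see a request raised two or more levels below the orchestrator without the orchestrator needing special knowledge of its sub-agents.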

Once the required action has been taken (for example, a manager approves a sensitive tool call), the updated continuation is sent back to the agent, which reconstructs the entire workflow and carries it forward.

Want to see it in action? Check out our GitHub demo.

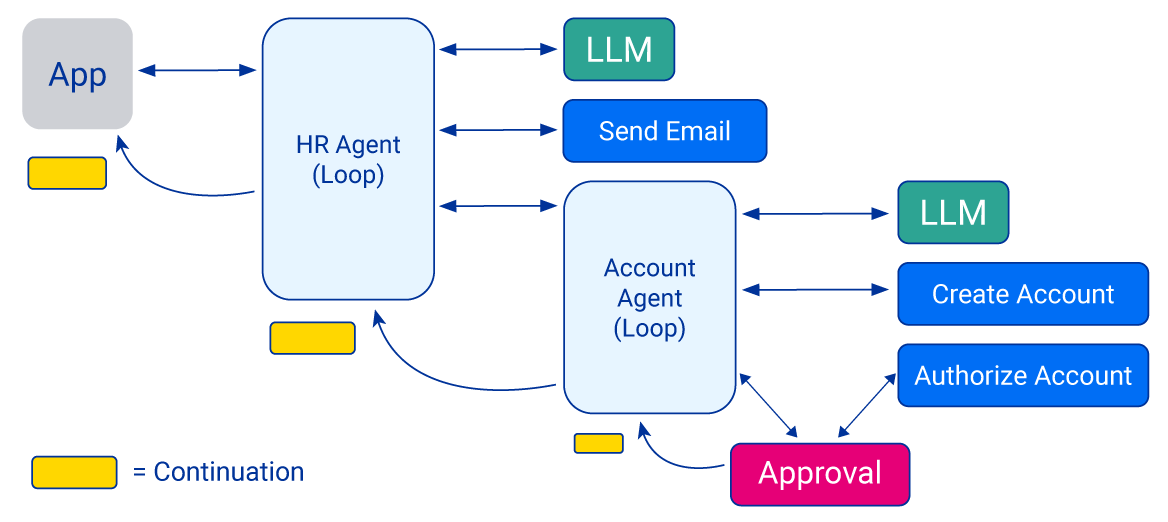

Real-world example: HR onboarding with Agent Continuations

Say you are automating an HR onboarding workflow. The main HR agent needs to:

- Create a new user account

- Set account privileges

- Send a welcome email

Authorizing account privileges is risky, however, and therefore requires explicit human approval.

With Agent Continuations, the workflow pauses right at the authorization step, bundles up the full agent state, and sends it back for review. Once approved, the workflow resumes, sends the welcome email, and completes: no lost progress, no manual restarts, and no risk of skipping human oversight.
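The onboarding flow described above can be mimicked with a toy loop. This is a minimal sketch under heavy assumptions: a fixed `PLAN` stands in for the decisions an LLM would make, the tool functions are stubs, and the continuation is a plain dict with hypothetical keys. It is meant to show the pause-and-resume shape, not how the SnapLogic prototypes are implemented.

```python
# Assumption: which tools require human sign-off before they run.
RISKY_TOOLS = {"set_account_privileges"}


def create_user_account(name):
    return f"account created for {name}"


def set_account_privileges(name, role):
    return f"{name} granted {role}"


def send_welcome_email(name):
    return f"welcome email sent to {name}"


TOOLS = {
    "create_user_account": create_user_account,
    "set_account_privileges": set_account_privileges,
    "send_welcome_email": send_welcome_email,
}

# A fixed plan replaces the LLM's tool-call decisions in this sketch.
PLAN = [
    ("create_user_account", {"name": "Ada"}),
    ("set_account_privileges", {"name": "Ada", "role": "admin"}),
    ("send_welcome_email", {"name": "Ada"}),
]


def run(continuation=None):
    """Run the plan; return ("paused", continuation) or ("done", messages)."""
    state = continuation or {"messages": [], "next_step": 0, "approved": False}
    messages = list(state["messages"])
    step = state["next_step"]
    approved = state["approved"]
    while step < len(PLAN):
        tool, args = PLAN[step]
        if tool in RISKY_TOOLS and not approved:
            # Stop before the risky call and hand the full state to a reviewer.
            return "paused", {"messages": messages, "next_step": step, "approved": False}
        messages.append({"tool": tool, "result": TOOLS[tool](**args)})
        approved = False  # an approval covers exactly one step
        step += 1
    return "done", messages


status, cont = run()
print(status, cont["next_step"])   # paused 1

cont["approved"] = True            # the reviewer signs off
status, log = run(cont)
print(status, len(log))            # done 3
```

The account created in the first run is not created again on resume, because the continuation records both the history and the step to resume from.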

Why this matters

Agent Continuations bring reliability and flexibility to advanced AI workflows.

- Agents can run for as long as they need to and are resilient to outages and restarts.

- Human approvals become first-class citizens, not awkward workarounds.

- Complex, distributed agent systems can pause and resume across environments, whether on your desktop, in the cloud, or across multiple teams.

Other frameworks do offer state management, but most of them either lack seamless human approval or cannot handle deeply nested agent architectures. Our approach, which grew out of the SnapLogic AgentCreator research team, combines both.

Where we are headed

We have built prototypes of Agent Continuations on top of the OpenAI Python API (available on GitHub) and within SnapLogic's own AgentCreator platform. We are actively working to extend these ideas to broader scenarios:

- Suspending agents for reasons other than human approval (for example, waiting for asynchronous events)

- Integration with other frameworks and standards

If you are interested in building robust, resumable AI agents, I invite you to try Agent Continuations. If you would like to see visual agent design in action, take a look at SnapLogic AgentCreator.

Final thoughts

Bringing agents into production is not just about what they can do, but also about how they recover, adapt, and collaborate. With Agent Continuations, we are laying the foundation for AI systems that can survive in the real world: pausing for humans when it matters, and always picking up exactly where they left off.

- Want to see the full talk? Watch it on YouTube

- Code and documentation: github.com/SnapLogic/agent-continuations

- Visual agent design: agentcreator.com