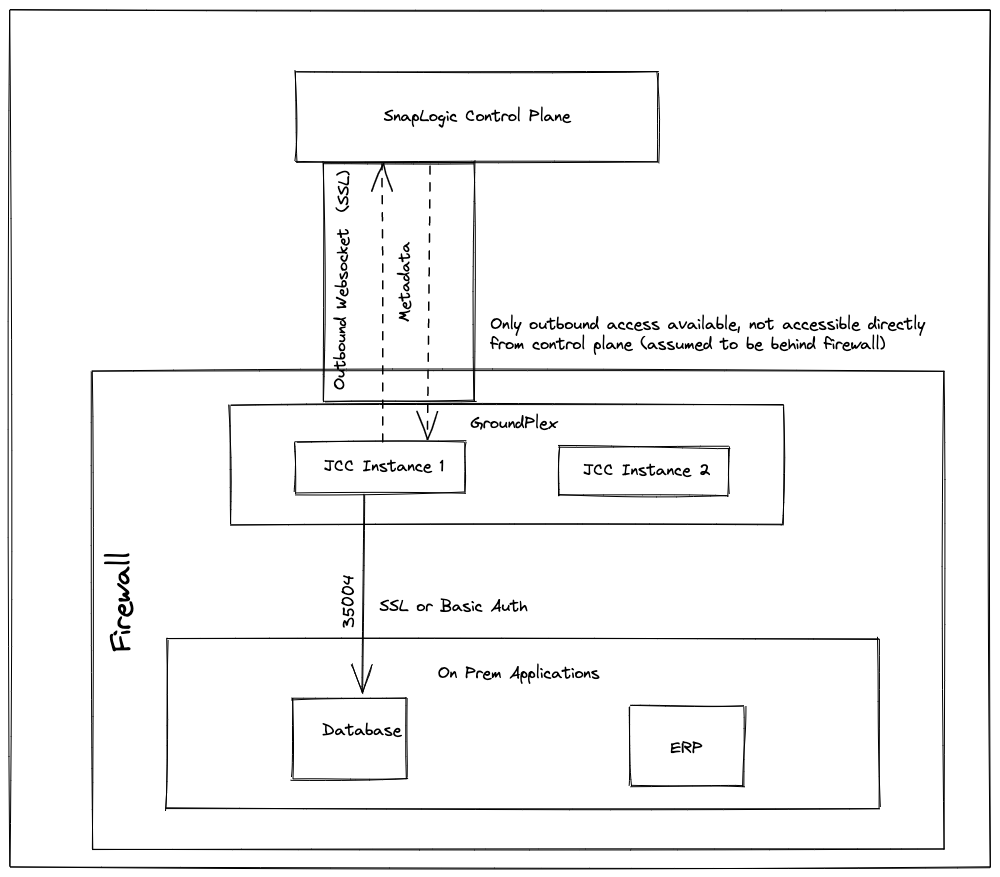

One of the most attractive value propositions of the SnapLogic platform is the ability to run our platform on-premises, either in a data center or in a cloud platform inside a private virtual network. However, because SnapLogic is a cloud-based platform, the actual design work takes place in our cloud-hosted design studio, which creates a connectivity requirement between the node, where the pipelines run, and the Control Plane. The aim of this blog post is to walk through a day in the life of a Groundplex JCC node: how it is created, what it requires to run, and, most importantly, the network configuration requirements and best practices, covering not just the how but also the why.

What’s in a Name: Snaplex JCC Node?

Simply stated, a Snaplex is a runtime. JCC stands for Java Component Container. As the name suggests, it contains the code that runs all the pipelines and monitors the node’s health, utilization, and everything else needed to keep it running smoothly. Essentially, two core processes run within the JCC: Monitor and Process.
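To make the Monitor/Process split concrete, here is a minimal Python sketch of the general supervisor pattern: one process watches a worker process and relaunches it when it exits abnormally. This is an illustration under stated assumptions, not SnapLogic’s implementation; the `supervise` function and its `max_restarts` limit are hypothetical.

```python
import subprocess
import sys
from typing import List, Tuple


def supervise(cmd: List[str], max_restarts: int = 3) -> Tuple[int, int]:
    """Run cmd and relaunch it after every non-zero exit.

    Hypothetical stand-in for a Monitor process watching a worker process.
    Returns (restarts_performed, last_exit_code).
    """
    restarts = 0
    while True:
        exit_code = subprocess.Popen(cmd).wait()  # block until the worker exits
        if exit_code == 0 or restarts >= max_restarts:
            return restarts, exit_code
        restarts += 1  # abnormal exit: start a fresh worker


if __name__ == "__main__":
    # A worker that always crashes is restarted twice, then the monitor gives up.
    crashing_worker = [sys.executable, "-c", "import sys; sys.exit(1)"]
    print(supervise(crashing_worker, max_restarts=2))  # (2, 1)
```

Keeping the watcher in a separate process means a hung or crashed worker cannot take its own supervisor down with it.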

Acquiring the Groundplex Node

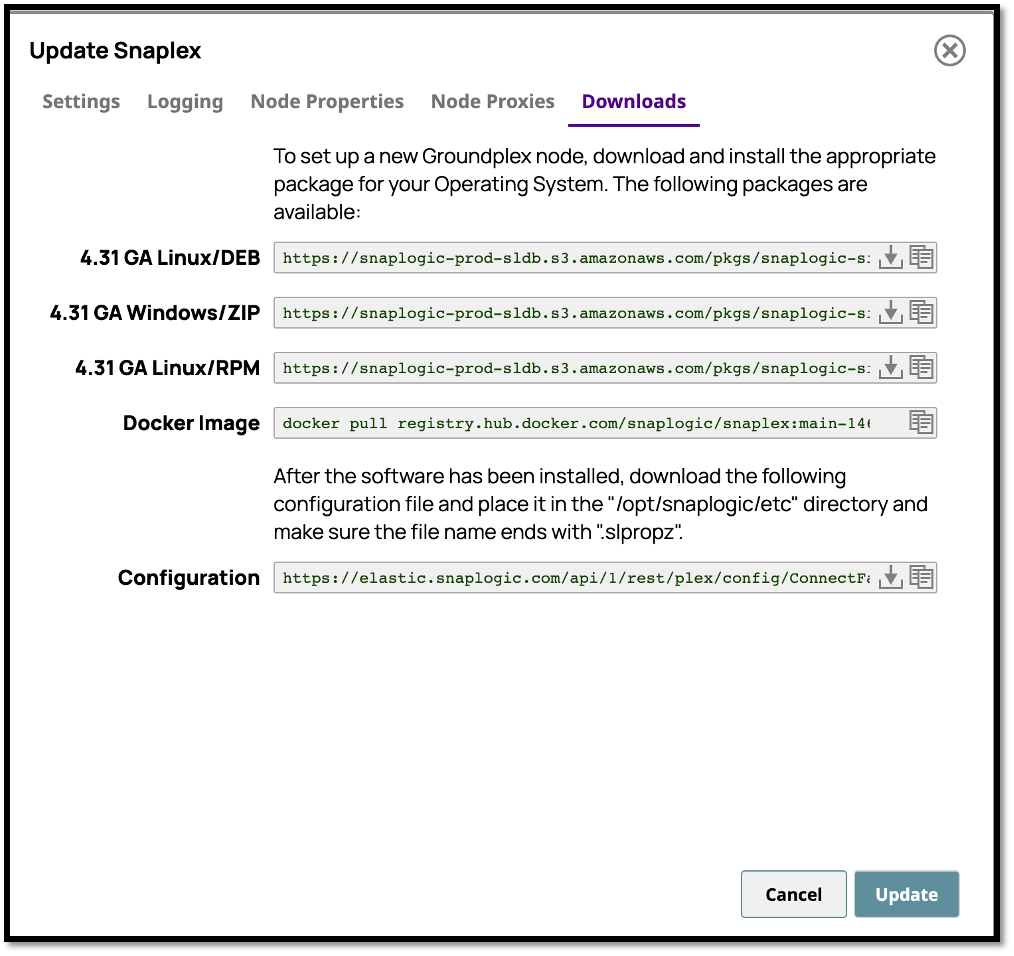

The SnapLogic Manager lets you set up a Snaplex node that serves as a runtime for processing pipelines on-premises. The available options for a Snaplex node are shown in the image above.

What Exactly Is in the Node?

The node is simply a WAR file, a compiled Java package. The most basic requirement to run the package is a JDK. As the image above shows, SnapLogic provides four options for running it:

- Windows Server

- Linux Distro

- RPM Package

- Docker Image

The available formats are designed for portability and minimal infrastructure requirements. The image/package is lightweight and can use a number of package management tools to take advantage of auto-scaling and load balancing in a Kubernetes environment. The “Configuration” is the instruction that establishes connectivity between the Groundplex (runtime) and the Control Plane (design surface); it is discussed next.

The SnapLogic Platform is an iPaaS that runs in the cloud, so the node requires always-on connectivity to retrieve the latest instruction metadata it needs for execution.

The Network Requirements

The node is a runtime. It runs integration pipelines, scheduled tasks, and API-triggered tasks, all of which are stored as metadata in the Control Plane in the cloud. The Connection data shown above instructs the node to “call home” when it starts up. Here is where it gets a bit more technical.

The First Call Home

At startup, the node loads the Connection parameters and establishes a connection with the Control Plane (CP). As of today, the CP resides in the US West region, with possible plans for expansion into the EMEA and EU regions.

The JCC’s first order of business is to register with the Control Plane using the “slproz” file downloaded onto the JCC via the configuration string shown above. Registration involves key exchanges, machine configuration parameters (including the Snaplex node type), and TLS certificates. Whether or not the JCC is found to be in compliance, the status is reported back to the Dashboard so the user can be notified.
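The registration step can be sketched as two halves: the node gathers machine configuration parameters into a request, and the Control Plane decides compliance and returns a status for the Dashboard. The sketch below is hypothetical throughout: the field names, the `allowed_node_types` check, and the JSON shape are illustrative assumptions and do not describe the real “slproz” format. Key exchange and TLS certificate handling are deliberately left out.

```python
import json
import os
import platform
import uuid


def build_registration_payload(org: str, node_type: str) -> dict:
    """Gather machine config parameters for a registration request (hypothetical fields)."""
    return {
        "node_id": str(uuid.uuid4()),  # hypothetical per-node identifier
        "org": org,                    # hypothetical organization name
        "node_type": node_type,        # the Snaplex node type mentioned above
        "hostname": platform.node(),
        "os": platform.system(),
        "cpu_count": os.cpu_count(),
    }


def evaluate_registration(payload: dict, allowed_node_types: set) -> dict:
    """Control Plane side (hypothetical): decide compliance and report a status."""
    ok = payload["node_type"] in allowed_node_types
    return {"node_id": payload["node_id"], "status": "compliant" if ok else "non_compliant"}


if __name__ == "__main__":
    request = build_registration_payload("example-org", "JCC")
    print(json.dumps(request, indent=2))                      # what would go over the wire
    print(evaluate_registration(request, {"JCC"})["status"])  # compliant
```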

Throughput, Connection Cost, and Proxies

The connection is made using an HTTP library, and by default the node checks in every 15 minutes for any updates from the Control Plane. Check-ins begin right after registration, when the Control Plane signs the TLS certificate with the organization-specific key created when the organization was set up. TCP connections carry a cost: TCP can be very “chatty,” and each open connection ties up resources on the Control Plane endpoint. Therefore, timeout values are configured to close the connection once the conversation is over.
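The check-in pattern (periodic polling, a per-request timeout, and closing the connection as soon as the exchange ends) can be sketched with Python’s standard `urllib`. The JCC itself is a Java application, so this is only an illustration: the 15-minute interval comes from the text, while the 30-second timeout and the function names are assumptions.

```python
import time
import urllib.request
from typing import Callable, List, Optional

CHECK_IN_INTERVAL_S = 15 * 60  # 15-minute default check-in period from the text
REQUEST_TIMEOUT_S = 30.0       # hypothetical timeout; the real value isn't stated


def check_in_once(url: str, timeout: float = REQUEST_TIMEOUT_S) -> bytes:
    """One check-in request; leaving the with-block closes the connection."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def check_in_loop(check_in: Callable[[], bytes], rounds: int,
                  interval: float = CHECK_IN_INTERVAL_S,
                  sleep: Callable[[float], None] = time.sleep) -> List[Optional[bytes]]:
    """Call check_in `rounds` times, sleeping `interval` seconds between calls."""
    results: List[Optional[bytes]] = []
    for i in range(rounds):
        try:
            results.append(check_in())
        except OSError:  # URLError and socket timeouts both subclass OSError
            results.append(None)  # record the failed check-in; try again next round
        if i < rounds - 1:
            sleep(interval)
    return results
```

In use, you would pass something like `lambda: check_in_once(url)`, where `url` is a hypothetical Control Plane endpoint. Injecting `check_in` and `sleep` keeps the loop testable without a network or a 15-minute wait.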

In most configurations, unless the SnapLogic APIM feature is enabled, the Groundplex JCC sits behind a proxy. Proxy servers serve many functions, including “hiding” the endpoint from the public internet, applying policies, and throttling connections. SnapLogic periodically checks the health of the connections to ensure they are opened and closed properly.
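Routing a node’s outbound calls through a proxy is usually a matter of client configuration. The JCC is a Java application and has its own proxy settings; this Python sketch only illustrates the idea with the standard library’s `urllib.request.ProxyHandler`. The proxy address is a hypothetical placeholder.

```python
import urllib.request

# Hypothetical corporate proxy address; substitute your own.
PROXY_URL = "http://proxy.internal.example:3128"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends outbound HTTP and HTTPS calls through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)
# opener.open("https://<control-plane-host>/...", timeout=30) would now go via the proxy.
```

Passing an explicit `ProxyHandler` replaces the default one `build_opener` would otherwise install from environment variables, so the proxy choice is visible in code.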

Websockets and Bi-directional Communications

Because the JCC does not accept inbound calls, once the connection between the Node and the Control Plane is established, a WebSocket connection, handled by the Control Plane using the Python async library, is used to exchange data in both directions. The WebSocket protocol is necessary because the Node and the Control Plane must remain in constant communication: the Node does not keep state information, i.e. there is no mechanism on the Node to store execution data, so the Node is unaware of the general state of pipelines, parallel executions, and so on. Additionally, users may be updating pipelines, Snaps, or other assets in the SnapLogic platform while that metadata is cached on the node, so an open socket connection has to persist to ensure the cached pipeline metadata is not stale and reflects the latest changes.
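The following `asyncio` sketch shows why a persistent bidirectional channel matters for a stateless node. Two in-memory queues stand in for the WebSocket: the Control Plane pushes an “asset updated” message, the node drops its cached metadata so the next execution fetches fresh data, and the node acknowledges on the same channel. The class names and message shapes are hypothetical; this is not SnapLogic’s protocol.

```python
import asyncio
from typing import Callable, Dict, Optional


class PipelineMetadataCache:
    """Node-side cache of pipeline metadata fetched from the Control Plane (hypothetical)."""

    def __init__(self, fetch: Callable[[str], dict]) -> None:
        self._fetch = fetch
        self._entries: Dict[str, dict] = {}

    def get(self, pipeline_id: str) -> dict:
        if pipeline_id not in self._entries:  # cache miss: ask the Control Plane
            self._entries[pipeline_id] = self._fetch(pipeline_id)
        return self._entries[pipeline_id]

    def invalidate(self, pipeline_id: str) -> None:
        self._entries.pop(pipeline_id, None)


async def node_channel(inbound: asyncio.Queue, outbound: asyncio.Queue,
                       cache: PipelineMetadataCache) -> None:
    """Handle messages pushed by the Control Plane and reply on the same channel."""
    while True:
        msg: Optional[dict] = await inbound.get()
        if msg is None:  # sentinel: channel closed
            return
        if msg["type"] == "asset_updated":
            cache.invalidate(msg["pipeline_id"])  # next get() refetches fresh metadata
        await outbound.put({"type": "ack", "id": msg["id"]})


async def demo():
    versions = {"p1": 1}
    cache = PipelineMetadataCache(lambda pid: {"id": pid, "version": versions[pid]})
    inbound: asyncio.Queue = asyncio.Queue()
    outbound: asyncio.Queue = asyncio.Queue()
    task = asyncio.create_task(node_channel(inbound, outbound, cache))

    before = cache.get("p1")
    versions["p1"] = 2  # a user edits the pipeline in the designer
    await inbound.put({"type": "asset_updated", "pipeline_id": "p1", "id": 7})
    await inbound.put(None)
    await task
    return before, cache.get("p1"), await outbound.get()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Without the pushed invalidation, the second `cache.get("p1")` would keep returning version 1, which is exactly the stale-metadata problem described above.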

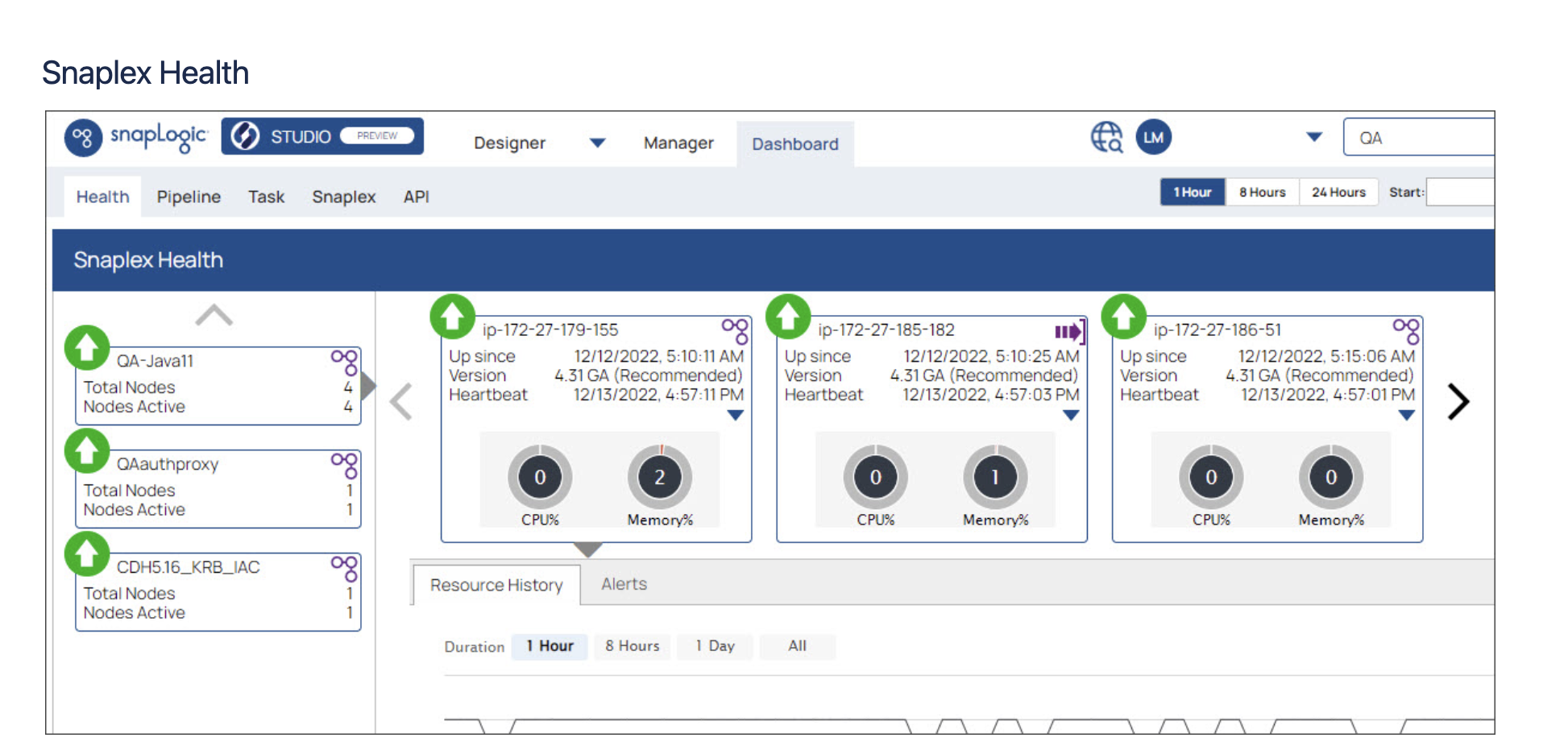

Staying Alive and Checking on Neighbors

In addition to “checking in” with the Control Plane, part of a JCC’s network life is awareness of its neighbors. Various “heartbeat” sequences report stats such as CPU, memory, and disk usage back to the Control Plane. Restart requests, new WAR versions, and encryption keys for storage are part of the constant data exchange during the life of the node. These are all handled by a Monitor service that tracks the health of the node and restarts it to prevent lockups or freezes. The Monitor also maintains a list of neighboring nodes, which it checks to ensure that the other nodes are participating in the distribution of pipeline executions.
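A neighbors list can be as simple as a map from node ID to the time its last heartbeat arrived, with a staleness window deciding who still counts as participating. This sketch is hypothetical: the `NeighborList` class and its 60-second window are assumptions, not documented SnapLogic behavior.

```python
import time
from typing import Dict, List, Optional


class NeighborList:
    """Track when each peer node was last heard from (hypothetical sketch)."""

    def __init__(self, stale_after_s: float = 60.0) -> None:
        self.stale_after_s = stale_after_s  # hypothetical staleness window
        self._last_seen: Dict[str, float] = {}

    def record_heartbeat(self, node_id: str, at: Optional[float] = None) -> None:
        self._last_seen[node_id] = time.monotonic() if at is None else at

    def active(self, now: Optional[float] = None) -> List[str]:
        """Nodes whose last heartbeat falls within the staleness window."""
        now = time.monotonic() if now is None else now
        return sorted(n for n, t in self._last_seen.items() if now - t <= self.stale_after_s)

    def stale(self, now: Optional[float] = None) -> List[str]:
        """Nodes that have gone quiet and may no longer be taking executions."""
        now = time.monotonic() if now is None else now
        return sorted(n for n, t in self._last_seen.items() if now - t > self.stale_after_s)


if __name__ == "__main__":
    neighbors = NeighborList(stale_after_s=60)
    neighbors.record_heartbeat("node-a", at=0)
    neighbors.record_heartbeat("node-b", at=50)
    print(neighbors.active(now=100))  # ['node-b']
    print(neighbors.stale(now=100))   # ['node-a']
```

`time.monotonic()` is used rather than wall-clock time so that system clock adjustments cannot make a live neighbor look stale.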

Every node sends a status update to the Control Plane every 20 seconds. The update communicates the node’s health, telemetry such as CPU utilization and memory usage, other performance and system-health data, and the distribution of pipeline executions based on overall system utilization.
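A status update is essentially a small serialized snapshot of node metrics. In the Python sketch below, the 20-second period comes from the text, while the JSON field names are hypothetical. CPU and memory percentages are passed in because the standard library has no portable way to read them; disk usage comes from the real `shutil.disk_usage` call.

```python
import json
import shutil
import time

STATUS_INTERVAL_S = 20  # status-update period stated in the text


def build_status_update(node_id: str, cpu_pct: float, mem_pct: float,
                        active_pipelines: int, path: str = ".") -> str:
    """Serialize one status update as JSON (field names are hypothetical)."""
    disk = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return json.dumps({
        "node_id": node_id,
        "timestamp": time.time(),
        "cpu_pct": cpu_pct,
        "mem_pct": mem_pct,
        "disk_used_pct": round(100 * disk.used / disk.total, 1),
        "active_pipelines": active_pipelines,
    })


if __name__ == "__main__":
    # A real sender would call this every STATUS_INTERVAL_S seconds and post the result.
    print(build_status_update("node-a", cpu_pct=42.0, mem_pct=63.5, active_pipelines=3))
```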

Load Balancing

The Control Plane handles load balancing of pipeline executions. However, to balance traffic across nodes for high availability and failover, the required approach is to place a load balancer in front of the nodes to direct traffic among them.
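In production, the load balancer in front of the nodes is a dedicated component, such as a hardware appliance, a cloud load balancer, or a reverse proxy. The Python sketch below only illustrates the behavior it provides: round-robin distribution that skips nodes failing a health check, so traffic fails over automatically. `RoundRobinBalancer` and the `is_healthy` callback are hypothetical names.

```python
from itertools import cycle
from typing import Callable, Iterable


class RoundRobinBalancer:
    """Spread requests across nodes, skipping any that fail a health check."""

    def __init__(self, nodes: Iterable[str], is_healthy: Callable[[str], bool]) -> None:
        self._nodes = list(nodes)
        self._ring = cycle(self._nodes)
        self._is_healthy = is_healthy

    def pick(self) -> str:
        # Try each node at most once per call before giving up.
        for _ in range(len(self._nodes)):
            node = next(self._ring)
            if self._is_healthy(node):
                return node
        raise RuntimeError("no healthy nodes available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["node-a", "node-b", "node-c"],
                                  is_healthy=lambda n: n != "node-b")  # node-b is down
    print([balancer.pick() for _ in range(4)])  # ['node-a', 'node-c', 'node-a', 'node-c']
```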

The monitor process within the JCC constantly checks CPU and memory utilization against thresholds (85% for CPU, and 95% for memory subject to a feature flag setting). This helps ensure that the load is spread evenly across the nodes.
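The threshold check itself is simple, and the sketch below shows one way a scheduler could use it to steer work away from busy nodes. The 85% and 95% values come from the text. Everything else is an assumption: treating the feature flag as an on/off switch for the memory check, using `>=` as the comparison, and picking the least CPU-loaded eligible node.

```python
from typing import Dict, Optional

CPU_THRESHOLD_PCT = 85.0     # CPU threshold stated in the text
MEMORY_THRESHOLD_PCT = 95.0  # memory threshold stated in the text


def is_overloaded(cpu_pct: float, mem_pct: float, memory_check_enabled: bool = True) -> bool:
    """True when a node crosses either threshold (memory check gated by a hypothetical flag)."""
    if cpu_pct >= CPU_THRESHOLD_PCT:
        return True
    return memory_check_enabled and mem_pct >= MEMORY_THRESHOLD_PCT


def least_loaded(nodes: Dict[str, Dict[str, float]]) -> Optional[str]:
    """Pick the least CPU-loaded node that is under both thresholds, if any (hypothetical)."""
    candidates = {n: m for n, m in nodes.items() if not is_overloaded(m["cpu"], m["mem"])}
    if not candidates:
        return None
    return min(candidates, key=lambda n: candidates[n]["cpu"])


if __name__ == "__main__":
    nodes = {"a": {"cpu": 90, "mem": 40}, "b": {"cpu": 30, "mem": 97}, "c": {"cpu": 50, "mem": 60}}
    print(least_loaded(nodes))  # c  (a is over the CPU threshold, b over the memory threshold)
```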

In summary, the SnapLogic Groundplex JCC node is a runtime that executes integration pipelines and tasks for the SnapLogic platform, and it can run on-premises in a data center or within a private virtual network in the cloud. The node is a lightweight package that runs on various operating systems and can be managed with package management software for auto-scaling and load balancing. It establishes a connection with the SnapLogic Control Plane, where pipeline instructions are stored, through a registration process that includes key exchanges and TLS certificates. The connection is maintained through regular check-ins, and timeout values close each connection once the exchange is over. In most configurations the node sits behind a proxy server; the SnapLogic APIM feature can enable the node to communicate directly with the Control Plane.