What happens when you’re faced with the challenge of maintaining a legacy data warehouse while dealing with ever-increasing volumes, varieties and velocities of data?

Legacy data warehouses, powerhouses in the era of structured data, were commonly built on RDBMS technologies from vendors such as Oracle, IBM, Microsoft, and Teradata. Data was extracted, transformed and loaded into data marts or the enterprise data warehouse with traditional ETL tools that were built for batch-oriented use cases and ran on expensive, multi-core servers. In the era of self-service and big data, enterprise IT is rethinking these technologies and approaches.

In response to these challenges, companies are steadily moving to modern data management solutions: NoSQL databases like MongoDB, Hadoop distributions from vendors like Cloudera and Hortonworks, cloud-based systems like Amazon Redshift, and data visualization from Tableau and others. Along this big data and cloud data warehouse journey, many people I speak with have realized that it’s vital not only to modernize their data warehouse implementations but also to future-proof how they collect data and feed it into their new analytics infrastructure. That requires an agile, multi-point data integration solution that is seamless and can handle both structured and unstructured data, whether it streams in real time or arrives in batches. As companies reposition IT and the analytics infrastructure from a back-end, infrastructure-management cost center to an end-to-end partner of the business, service models become an integral part of both the IT and business roadmaps.

The Flexibility, Power and Agility Required for the New Data Warehouse

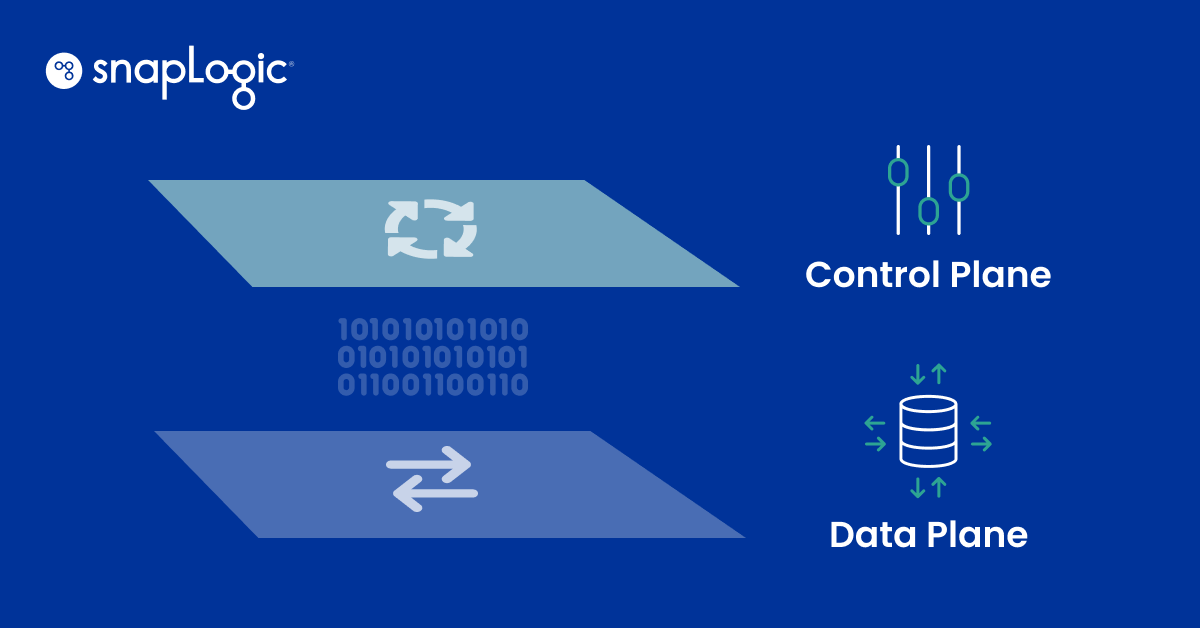

In most enterprises today, IT’s focus is shifting away from spending its valuable resources on undifferentiated heavy lifting and toward delivering differentiating business value. By using SnapLogic to move big data integration management closer to the edge, IT can free up resources for higher-value projects and tasks while streamlining and accelerating the entire data-to-insights process. SnapLogic’s drag-and-drop data pipeline builder and streaming integration platform remove the burden and complexity of ingesting data into systems like Hadoop, turning data integration from a rigid, time-consuming process into one that end users manage and control more directly.

Productivity Gains with Faster Data Integration

A faster approach to data integration not only boosts productivity but in many cases produces substantial cost savings. In one case, a SnapLogic customer with more than 200 integration interfaces, managed and supported by a team of 12, reduced its integration management footprint to fewer than 2 FTEs. The customer realized annual hard cost savings of more than 62%, an 8:1 annual FTE improvement, infrastructure savings of more than 50% and a 35% improvement in its DevOps release schedule. The same customer also gained net productivity and faster time to market by transferring ownership of its Hadoop data ingest process to data scientists and marketers. This shift made the company more responsive and significantly streamlined its data-to-insights process, enabling faster, cheaper and better decision-making across the enterprise.

A More Agile Company

Greater integration agility means being able to make moves and changes faster, better and more cheaply. SnapLogic’s modular design lets data scientists and marketers stay light on their feet, making adds, moves and changes in a snap, with the assurance they need, as new ideas arise and new kinds of data sources enter the picture.

By integrating with Hadoop through the SnapLogic Enterprise Integration Platform, which provides fast, modern, multi-point data integration, customers can seamlessly connect to and stream data from virtually any endpoint, whether cloud-based, ground-based, legacy, structured or unstructured. In addition, because data integration is simpler, SnapLogic customers no longer need to spend valuable IT resources managing and maintaining data pipelines, freeing those resources for work of greater business value.

Randy Hamilton is a Silicon Valley entrepreneur and technologist who writes periodically about industry-related topics, including the cloud, big data and IoT. Randy has been an Instructor (Open Distributed Systems) at UC Santa Cruz and has held positions at Basho (Riak NoSQL database), Sun Microsystems and Outlook Ventures. He was also one of the founding members and VP Engineering at Match.com.

Next Steps:

- Read the whitepaper: The Death of Traditional Data Integration

- Contact Us to discuss your cloud and big data integration requirements