We’ve now established the architectural blueprint (Parts 1 and 2), the operating model (Part 3), and the core principle of containing variability (Part 4). The next challenge is organizational: ensuring that agent velocity and autonomy do not lead to a loss of control, an explosion of risk, or a self-inflicted production incident. Intelligence without coordination is unstable.

This part of the series is about building a scalable system of governance that codifies what is “allowed execution,” making the path of least resistance the path of greatest security and auditability. It is the platform layer that turns agent enthusiasm into enterprise durability.

The self-destructive arc of agent sprawl

The shift from AI-as-advisor to AI-as-actor fundamentally changes your architecture. It also brings a predictable and dangerous consequence.

Agent programs that find early success tend to follow the same self-destructive arc:

- A single team ships a useful automated workflow

- Other teams copy the underlying tool or integration

- A marketplace for these tools emerges within the organization

- Everyone rushes to publish MCP tools

- Duplicate capabilities (with slight variations) multiply

- Credentials and access scopes become fragmented and messy

- Uncontrolled tool calls spike, inflating costs and load

- Ownership and accountability for execution blur

- The first major incident occurs due to an uncontrolled action

- The organization reacts by locking everything down, stalling the entire program

This arc is not a feature of AI; it is the inevitable result of scaling execution without an operating model. The enterprise doesn’t need more tools; it needs governance that is engineered to scale. It needs a platform where doing the right, safe, and auditable thing is easier and cheaper than doing the wrong thing.

Start with the core system of record: the capability catalog

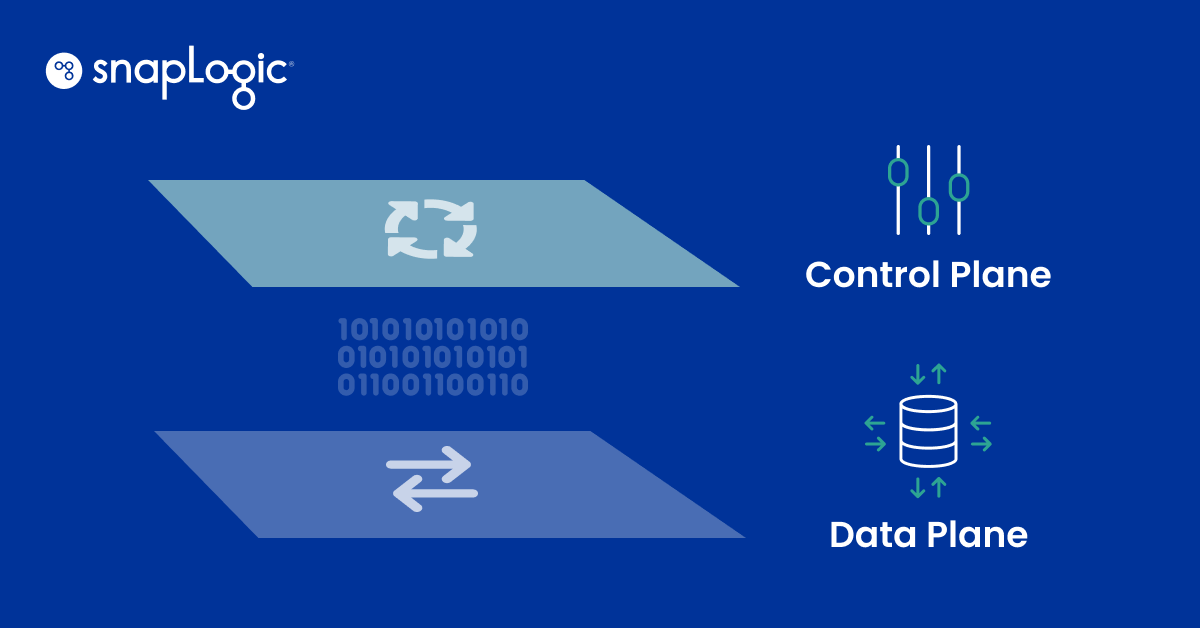

If you do not have a capability catalog, you do not have a control plane — you have a pile of uncoordinated integrations. This catalog must be the living system of record for all “allowed execution.”

It is how you answer the most critical questions: “Do we already have this?” and “Is it safe to use?”

A production-grade catalog must enforce the following metadata:

- Capability name and business intent: what the business thinks is happening (e.g., “ProvisionAccess”), not the technical API being called

- Owner team and on-call rotation: clear, mandatory accountability for maintenance and failure

- Tier (0–3): the risk-level designation that governs policy application

- Systems and data classes touched: explicitly defining the execution blast radius (e.g., ERP, PII, regulated data)

- Version and deprecation date (mandatory): ensuring the ecosystem moves to new standards and sheds legacy risk

- Approval policy and thresholds: defining when human oversight is required

- SLOs and observability links: linking the capability to its operational reality
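
The metadata above can be sketched as a typed record that the catalog rejects when required fields are missing. This is a minimal illustration only: `CatalogEntry`, its field names, and `validation_errors` are hypothetical names chosen for this example, not part of any specific product or of MCP.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CatalogEntry:
    """One record in a hypothetical capability catalog (illustrative schema)."""
    name: str                         # business intent, e.g. "ProvisionAccess"
    owner_team: str
    on_call_rotation: str
    tier: int                         # risk tier, 0 through 3
    systems_touched: tuple[str, ...]  # e.g. ("ERP",)
    data_classes: tuple[str, ...]     # e.g. ("PII",)
    version: str
    deprecation_date: date            # mandatory, per the list above
    approval_policy: str
    slo_link: str

    def validation_errors(self) -> list[str]:
        """Return every reason this entry should be rejected at publish time."""
        errors = []
        if self.tier not in (0, 1, 2, 3):
            errors.append("tier must be 0-3")
        for field_name in ("name", "owner_team", "on_call_rotation",
                           "version", "approval_policy", "slo_link"):
            if not getattr(self, field_name).strip():
                errors.append(f"{field_name} is required")
        if not self.systems_touched:
            errors.append("systems_touched is required")
        return errors


# Example entry; all values are made up for illustration.
example = CatalogEntry(
    name="ProvisionAccess",
    owner_team="identity-platform",
    on_call_rotation="identity-oncall",
    tier=2,
    systems_touched=("ERP",),
    data_classes=("PII",),
    version="1.2.0",
    deprecation_date=date(2030, 1, 1),
    approval_policy="manager-approval",
    slo_link="https://example.invalid/slo/provision-access",
)
print(example.validation_errors())
```

Returning a list of errors rather than raising on the first one lets a publishing pipeline show a team everything that blocks registration in a single pass.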

The tiered model for certification

The binary “experimental vs. production” model fails immediately with agents because risk is not binary. The control plane must enforce a tiered certification model aligned to the potential blast radius:

- Tier 0 (safe context): read-only retrieval, summarization, strict masking, and output validation; high velocity, low risk

- Tier 1 (reversible): bounded actions and deterministic workflows with full traceability; actions that can be undone or compensated

- Tier 2 (high-impact): financial, identity, or production changes; requires central approvals, step-up authentication, rollback plans, and immutable decision records

- Tier 3 (regulated/irreversible): deletions, terminations, or export of regulated datasets; requires separation of duties, replay testing, and the strictest change management

Certification must be mechanical. Teams must know the exact requirements to promote a capability, ensuring risk controls are enforced, not debated.
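
One way to make certification mechanical is to express each tier's requirements as data and compute the gap. The sketch below assumes the control names shown (derived from the tier descriptions above) and assumes each tier is checked only against its own list; whether higher tiers also inherit lower-tier controls is a policy choice the text does not specify. `TIER_REQUIREMENTS`, `missing_controls`, and `can_promote` are hypothetical names.

```python
# Controls each tier must evidence before promotion (illustrative names).
TIER_REQUIREMENTS: dict[int, frozenset[str]] = {
    0: frozenset({"masking", "output_validation"}),
    1: frozenset({"traceability", "compensation_path"}),
    2: frozenset({"central_approval", "step_up_auth",
                  "rollback_plan", "immutable_decision_record"}),
    3: frozenset({"separation_of_duties", "replay_testing",
                  "change_management"}),
}


def missing_controls(tier: int, evidence: set[str]) -> list[str]:
    """List the controls still missing for the requested tier, sorted by name."""
    if tier not in TIER_REQUIREMENTS:
        raise ValueError(f"unknown tier: {tier}")
    return sorted(TIER_REQUIREMENTS[tier] - evidence)


def can_promote(tier: int, evidence: set[str]) -> bool:
    """A capability is promotable only when nothing is missing."""
    return not missing_controls(tier, evidence)


print(missing_controls(2, {"central_approval", "rollback_plan"}))
```

Because the answer is a computed list rather than a review meeting, a team can see exactly which controls stand between its capability and the next tier.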

FinOps: governing the true drivers of spend

The biggest surprise in agentic execution is that the real cost is not model tokens, but the operational fallout from ungoverned action. The control plane must track and enforce against the true spend drivers:

- Tool/API fan-out: uncontrolled agents making multiple, overlapping downstream calls for a single intent

- Retries and timeouts: track the retry amplification factor, because unbounded retries during an outage can spike costs and overload systems of record

- Human review load: the cost of approvals, exceptions, and escalations, which can exceed the cost of the automation itself
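
The article does not define the retry amplification factor, so the sketch below assumes one plausible definition: total downstream attempts divided by the number of calls the agents actually intended. It pairs that metric with a bounded, capped exponential backoff schedule, a common way to keep retries from growing without limit. Both function names are hypothetical.

```python
def retry_amplification_factor(total_attempts: int, intended_calls: int) -> float:
    """Assumed definition: attempts actually sent per call that was intended.

    A value of 1.0 means no retries; 3.0 means downstream systems saw three
    times the load the agents meant to generate.
    """
    if intended_calls <= 0:
        raise ValueError("intended_calls must be positive")
    return total_attempts / intended_calls


def backoff_schedule(max_retries: int, base_seconds: float,
                     cap_seconds: float) -> list[float]:
    """Delays before each retry: doubling from base_seconds, capped, finite."""
    return [min(cap_seconds, base_seconds * 2 ** attempt)
            for attempt in range(max_retries)]


print(retry_amplification_factor(450, 150))
print(backoff_schedule(4, 0.5, 3.0))
```

The hard limit on `max_retries` is the important part: it puts a ceiling on the amplification factor even during a prolonged outage.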

Governance steers the AI control plane by a few key metrics, such as cost per governed action and cost per successful outcome. It protects the budget from execution volatility with spend ceilings and throttles set per capability tier.
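
As a rough sketch of these ideas, the two metrics reduce to simple ratios, and a spend ceiling can be a per-tier budget that refuses charges once it would be exceeded. The class and function names and the dollar figures are assumptions for illustration; a real control plane would also need time windows, persistence, and concurrency control.

```python
from dataclasses import dataclass


def cost_per_governed_action(total_cost: float, governed_actions: int) -> float:
    """Total spend divided by the number of actions that passed through governance."""
    if governed_actions <= 0:
        raise ValueError("governed_actions must be positive")
    return total_cost / governed_actions


def cost_per_successful_outcome(total_cost: float, successful_outcomes: int) -> float:
    """Total spend divided by the number of outcomes that actually succeeded."""
    if successful_outcomes <= 0:
        raise ValueError("successful_outcomes must be positive")
    return total_cost / successful_outcomes


@dataclass
class SpendWindow:
    """Budget for one capability tier within one accounting window."""
    ceiling: float
    spent: float = 0.0

    def try_charge(self, cost: float) -> bool:
        """Record the cost if it fits under the ceiling; otherwise refuse it."""
        if self.spent + cost > self.ceiling:
            return False
        self.spent += cost
        return True


# Hypothetical per-tier ceilings: riskier tiers get tighter budgets.
ceilings = {0: SpendWindow(1000.0), 2: SpendWindow(10.0)}
print(ceilings[2].try_charge(6.0), ceilings[2].try_charge(5.0))
```

Comparing the two ratios is informative in itself: when cost per successful outcome is far above cost per governed action, much of the spend is going to actions that did not produce a result.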

Action supply chain and enforcement

In an agent ecosystem, third-party MCP servers and vendors become execution surfaces: their behavior directly changes outcomes. Your rule must be: agents don’t call vendors; agents call capabilities.

The vendor logic sits behind the capability boundary, where the control plane enforces security and quality gates:

- Validation and schemas

- Policy and approvals

- Audit logging and deterministic failure modes

- Versioning and deprecation

This framework is the foundation of the AI control plane’s golden rule: decentralize capability creation, centralize enforcement. A small platform function owns the publishing standards, policy enforcement primitives, certification gates, and kill switches. This approach enables contribution while preventing chaos.
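
The capability boundary can be sketched as a small gateway: teams publish capabilities, and every call passes through the same central checks before the vendor logic runs. Everything here is a hypothetical illustration, including the `Gateway` and `Capability` names, the rule that tier 2 and above need approval, and the status strings; it is not an MCP API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Capability:
    name: str
    tier: int
    required_fields: frozenset[str]             # minimal stand-in for a schema
    handler: Callable[[dict[str, Any]], Any]    # vendor logic lives behind this


@dataclass
class Gateway:
    capabilities: dict[str, Capability] = field(default_factory=dict)
    disabled: set[str] = field(default_factory=set)
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def publish(self, cap: Capability) -> None:
        """Decentralized creation: any team can register a capability."""
        self.capabilities[cap.name] = cap

    def kill(self, name: str) -> None:
        """Kill switch: block a capability without unpublishing it."""
        self.disabled.add(name)

    def _decide(self, name: str, payload: dict[str, Any], approved: bool) -> str:
        cap = self.capabilities.get(name)
        if cap is None:
            return "unknown_capability"
        if name in self.disabled:
            return "killed"
        if not cap.required_fields.issubset(payload):
            return "invalid_payload"
        if cap.tier >= 2 and not approved:      # assumed approval threshold
            return "approval_required"
        return "allowed"

    def invoke(self, name: str, payload: dict[str, Any],
               approved: bool = False) -> dict[str, Any]:
        """Centralized enforcement: every decision is logged, allowed or not."""
        outcome = self._decide(name, payload, approved)
        self.audit_log.append({"capability": name, "outcome": outcome})
        if outcome != "allowed":
            return {"status": outcome}          # deterministic failure mode
        return {"status": "ok", "result": self.capabilities[name].handler(payload)}


gateway = Gateway()
gateway.publish(Capability("LookupOrder", 0, frozenset({"order_id"}),
                           lambda p: f"order {p['order_id']} found"))
print(gateway.invoke("LookupOrder", {"order_id": "A-17"}))
```

The agent only ever sees capability names and a fixed set of status values, so swapping the vendor behind `handler` does not change what the agent can do or how failures look.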

Explore the AI control plane series

Part 1: Middleware is the new control plane for AI

Understand how MCP reshapes enterprise architecture and collapses the distance between intent and action.

Part 2: What a real AI control plane looks like before MCP sprawl sets in

Learn the execution primitives, governance, and oversight that ensure autonomous systems run safely and predictably.

Part 3: How to run the AI control plane without turning autonomy into chaos

This post provides a practical operating model for scaling AI agents, managing risk, and building trust in production.

Part 4: Making Trust Visible: The Foundation for Agentic Scale

How to build a trust fabric through capabilities, auditable decision records, and tiered control to safely govern AI agents in the enterprise.

Part 5: The Governance Engine: How Enterprises Maintain Control Over Agentic AI

Explore building a scalable governance system and capability catalog to maintain control, auditability, and durability over autonomous AI agents.

Part 6: The Enterprise Rollout: Hybrid Execution and the Path to Operational AI

Understand the migration path and architectural pattern of centralized governance with distributed execution to achieve operational AI across hybrid enterprise environments.